Many body DPD on DL_MESO_DPD multi-GPU

This module implements the many-body DPD algorithm on the multi-GPU version of DL_MESO_DPD.

Purpose of Module

One of the main weaknesses of standard DPD simulation lies in its equation of state. For example, compressible gases or vapor-liquid mixtures are difficult, if not impossible, to simulate correctly. The many-body DPD approach overcomes this limitation by extending the potential of neighbour beads to a large cut-off radius.
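For orientation, a commonly used many-body DPD formulation (the Warren form, assumed here for illustration; this page does not state which variant DL_MESO_DPD implements) makes the conservative force between beads $i$ and $j$ depend on their local densities:

$$
\rho_i = \sum_{j \neq i} w_\rho(r_{ij}), \qquad
w_\rho(r) = \frac{15}{2\pi r_d^3}\left(1 - \frac{r}{r_d}\right)^2 \quad (r < r_d),
$$

$$
\mathbf{F}^{C}_{ij} = A\, w_c(r_{ij})\,\mathbf{e}_{ij} + B\,(\rho_i + \rho_j)\, w_d(r_{ij})\,\mathbf{e}_{ij},
\qquad w_c(r) = 1 - \frac{r}{r_c}, \quad w_d(r) = 1 - \frac{r}{r_d}.
$$

Here $A < 0$ gives an attractive term with cut-off $r_c$, $B > 0$ gives a density-dependent repulsive term with cut-off $r_d$, and $\mathbf{e}_{ij}$ is the unit vector between the two beads. The symbols $A$, $B$, $r_c$ and $r_d$ are notation introduced for this sketch, not names taken from the code.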

This module implements many-body DPD on the multi-GPU version of DL_MESO_DPD. The new feature allows us to simulate complex systems such as liquid drops, phase interactions, etc.

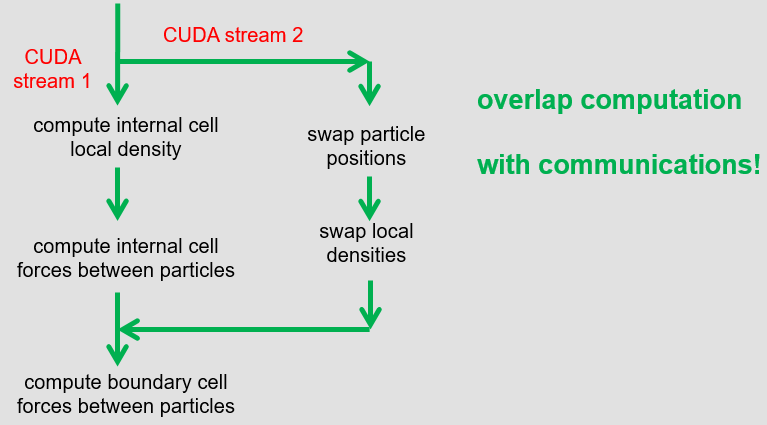

From an implementation point of view, the algorithm first loops over the internal cells of a typical domain to calculate the local densities, then performs a second loop to compute the forces acting between particles. To achieve good scaling across multiple GPUs, the computation of local densities and forces must overlap with the exchange of particle positions and local densities. This is achieved by first computing a partial sum of the forces from the internal particles and then adding the contributions from the border particles. A flowchart of the algorithm is presented in the figure below.
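The two-pass structure and the partial force sum described above can be illustrated with a minimal serial sketch in Python. It is not the DL_MESO_DPD implementation (which runs on GPUs with MPI); it assumes the Warren form of the many-body conservative force, and the parameter values, the toy particle positions, the `border` set and all function names are hypothetical. In this toy, all densities are computed before the force pass, so only the split of the force accumulation is shown.

```python
import math
from itertools import combinations

def w_rho(r, rd):
    # Normalised density weight; the Warren form is an assumption of this sketch.
    return 15.0 / (2.0 * math.pi * rd**3) * (1.0 - r / rd) ** 2 if r < rd else 0.0

def local_densities(pos, rd):
    # Pass 1: accumulate the local density of every particle.
    rho = [0.0] * len(pos)
    for i, j in combinations(range(len(pos)), 2):
        w = w_rho(math.dist(pos[i], pos[j]), rd)
        rho[i] += w
        rho[j] += w
    return rho

def add_pair_forces(pos, rho, pairs, forces, A, B, rc, rd):
    # Pass 2: add the conservative force of each listed pair into `forces`.
    for i, j in pairs:
        d = [a - b for a, b in zip(pos[i], pos[j])]
        r = math.sqrt(sum(c * c for c in d))
        if r == 0.0 or r >= rc:
            continue
        mag = A * (1.0 - r / rc)
        if r < rd:
            mag += B * (rho[i] + rho[j]) * (1.0 - r / rd)
        for k in range(3):
            f = mag * d[k] / r
            forces[i][k] += f
            forces[j][k] -= f

# Toy configuration; particles 3 and 4 play the role of border particles.
pos = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.0, 0.6, 0.0),
       (0.9, 0.4, 0.0), (0.4, 0.4, 0.5)]
border = {3, 4}
A, B, rc, rd = -40.0, 25.0, 1.0, 0.75  # hypothetical parameter values

rho = local_densities(pos, rd)
all_pairs = list(combinations(range(len(pos)), 2))
interior_pairs = [p for p in all_pairs if not (set(p) & border)]
border_pairs = [p for p in all_pairs if set(p) & border]

# Partial sum: interior pairs first (in a parallel code this work can overlap
# with communication), then the border pairs once their data are available.
forces_split = [[0.0] * 3 for _ in pos]
add_pair_forces(pos, rho, interior_pairs, forces_split, A, B, rc, rd)
add_pair_forces(pos, rho, border_pairs, forces_split, A, B, rc, rd)

# Reference: all pairs in a single sweep.
forces_ref = [[0.0] * 3 for _ in pos]
add_pair_forces(pos, rho, all_pairs, forces_ref, A, B, rc, rd)
```

Because force accumulation is additive, `forces_split` agrees with `forces_ref` up to floating-point rounding, which is what makes it safe to defer the border contributions until the exchanged data have arrived.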

Background Information

This module is part of the DL_MESO_DPD code. Full support and documentation are available at:

To download the DL_MESO_DPD code you need to register at https://gitlab.stfc.ac.uk. Please contact Dr. Michael Seaton at Daresbury Laboratory (STFC) for further details.

Building and Testing

The DL_MESO code is developed using git version control. Currently, the multi-GPU version is under a branch named multi_GPU_version. After downloading the code, check out the GPU branch and look into the DPD/gpu_version folder, i.e.:

    git clone https://gitlab.stfc.ac.uk/dl_meso.git
    cd dl_meso
    git checkout multi_GPU_version
    cd ./DPD/gpu_version/bin

To compile and run the code you need the CUDA Toolkit (>=8.0) installed and a CUDA-enabled GPU device (see http://docs.nvidia.com/cuda/#axzz4ZPtFifjw). For the MPI library, OpenMPI 3.1.0 has been used. Install hwloc if you want to set the GPU affinity between devices and CPU cores; otherwise remove the -DHWLOC flag in the Makefile.

Finally, you need to install the ALL library and make sure the ALL path is set correctly. ALL handles the load balancing among the GPUs; for details see Load balancing for multi-GPU DL_MESO.

Use make all to compile, resulting in the executable dpd_gpu.exe.

A test loop is provided in the tests folder. Run ./Tesloop_All, choose option 2 and specify 8 as the number of GPUs, then verify the results with option 3. No difference should appear in the statistical values and final stress values (the final printed positions are those of randomly chosen particles and can differ from run to run).

For the current module, the test/SurfaceDrop test case is a good example of combining many-body DPD and load balancing, as presented in Load balancing for multi-GPU DL_MESO.

Below is a snapshot from a simulation based on the same input (but with a larger system), run on 8 GPUs for 35k time steps.

Source Code

This module has been merged into the DL_MESO code. It is composed of the following commits (you need to be registered as a collaborator):